Tokenization is an established method for protecting sensitive information. “Understanding Tokenization and its Relation to PCI-DSS” explains what tokenization is and how it relates to the Payment Card Industry Data Security Standard (PCI-DSS). The article covers both the technical process and the compliance implications of using tokenization under PCI-DSS, along with its benefits, challenges, and implementation practices.

Understanding Tokenization

Tokenization is a critical concept in the sphere of data security.

Definition and Functionality of Tokenization

Tokenization is a process that converts sensitive data into non-sensitive equivalents, known as tokens. A token has no exploitable meaning or value on its own, so a breach of a system that holds only tokens does not expose the underlying information. It’s primarily used to protect sensitive data like credit card numbers or Personally Identifiable Information (PII).

The Difference between Tokenization and Encryption

While both encryption and tokenization are methods of data protection, they differ in how the protected value relates to the original. Encryption transforms data mathematically into another form, so anyone who obtains the secret decryption key can recover it. Tokenization, on the other hand, replaces sensitive data with tokens that typically have no mathematical relationship to the original values, so they cannot be reversed without access to the tokenization system.

Tokenization Process Explained

During tokenization, the original data is replaced with a random string of characters. This string, or token, is meaningless on its own but is linked to the original data within a secure tokenization system. If a token needs to be converted back to the original data (detokenization), it must be processed through the system that was initially used for tokenization.
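
The process described above can be sketched as a small in-memory token vault. This is a minimal illustration, not a production design: the `TokenVault` class, its method names, and the `tok_` prefix are assumptions made for this example, and a real tokenization system would keep the mapping in a hardened, access-controlled store.

```python
import secrets


class TokenVault:
    """Toy in-memory token vault (hypothetical, for illustration only)."""

    def __init__(self):
        # token -> original value; a real vault would be a secured datastore
        self._token_to_value = {}

    def tokenize(self, value: str) -> str:
        # The token is random, so it has no mathematical link to the value.
        token = "tok_" + secrets.token_hex(16)
        while token in self._token_to_value:  # regenerate on an unlikely collision
            token = "tok_" + secrets.token_hex(16)
        self._token_to_value[token] = value
        return token

    def detokenize(self, token: str) -> str:
        # Only the vault that issued the token can map it back.
        if token not in self._token_to_value:
            raise KeyError("unknown token")
        return self._token_to_value[token]


vault = TokenVault()
pan = "4111111111111111"  # widely used test card number, not a real account
token = vault.tokenize(pan)
print(token)                    # random on every run
print(vault.detokenize(token))  # 4111111111111111
```

A second `TokenVault` instance cannot detokenize this token, which mirrors the point that a token is only meaningful to the system that issued it.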

Common Uses of Tokenization

Tokenization is commonly used in the payment industry to protect cardholder data. Online retailers use tokenization to store tokens instead of card details, improving data security. Tokenization is also utilized in healthcare to protect sensitive patient information.

Tokenization and Data Protection, A Practical View

Tokenization provides a robust security measure that limits the damage of data breaches. If tokens are intercepted or stolen, they are useless without the corresponding detokenization mechanism. Hence, tokenization is instrumental in protecting sensitive data during storage and transmission.

Understanding PCI-DSS

Payment Card Industry Data Security Standard (PCI-DSS) is an integral part of data protection procedures.

Explanation of PCI-DSS

PCI-DSS is a set of security standards designed to ensure that businesses processing card payments handle cardholder data securely. It applies to all entities that store, process, or transmit cardholder data.

The Significance of PCI-DSS to Businesses

Compliance with PCI-DSS is critical for maintaining trust with consumers, avoiding potential fines, and protecting against damaging data breaches. Non-compliance also impacts brand reputation and customer loyalty.

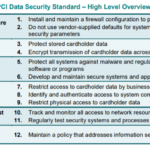

Key Requirements of PCI-DSS

There are twelve key requirements of PCI-DSS which can broadly be categorized into six major objectives: building and maintaining a secure network, protecting cardholder data, maintaining a vulnerability management program, implementing strong access control measures, monitoring and testing networks, and maintaining an information security policy.

Understanding the PCI-DSS Compliance Process

The process for achieving PCI-DSS compliance involves assessing the current payment environment, remedying vulnerabilities, and reporting compliance to the acquiring bank and card brands.

Relation Between Tokenization and PCI-DSS

Tokenization plays a significant role in achieving PCI-DSS compliance.

How Tokenization Helps in Achieving PCI-DSS Compliance

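Tokenization reduces the amount of cardholder data that a company must store and manage, thereby reducing the scope of PCI-DSS compliance. Systems that hold only tokens, and have no way to recover the original card numbers, can often be removed from scope or made subject to fewer requirements. The tokenization system itself, and any system that can detokenize or still handles the original card data, remains in scope and must be protected accordingly.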

PCI-DSS’s Stance on Tokenization

The PCI Security Standards Council, which maintains PCI-DSS, recognizes the role of tokenization in securing card data and has published guidance on its use. Under that guidance, businesses using tokenization are expected to use a secure tokenization process, protect the integrity of the tokenization system, and validate their tokenization solution against the relevant PCI-DSS requirements.

Examples Illustrating the Role of Tokenization in PCI-DSS

A retail business might tokenize cardholder data immediately upon entry, removing the need for storing sensitive card data on their systems. An online merchant may likewise use tokenization to securely store customers’ card data for recurring payments without storing actual card information, thereby reducing the data set exposed to potential breaches.
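
The recurring-payment example can be sketched as follows. Everything here is hypothetical: `FakeProvider` stands in for a tokenization or payment provider, `Merchant` for the online merchant, and the method names are invented for the illustration. The point it shows is that the merchant's own records contain only tokens.

```python
import secrets
from dataclasses import dataclass, field


@dataclass
class FakeProvider:
    """Hypothetical stand-in for a tokenization/payment provider."""
    _vault: dict = field(default_factory=dict)  # token -> card number

    def tokenize(self, pan: str) -> str:
        token = "tok_" + secrets.token_hex(8)
        self._vault[token] = pan
        return token

    def charge(self, token: str, amount_cents: int) -> str:
        pan = self._vault[token]  # detokenization happens only on the provider side
        return f"charged {amount_cents} to card ending {pan[-4:]}"


@dataclass
class Merchant:
    provider: FakeProvider
    saved_cards: dict = field(default_factory=dict)  # customer_id -> token, never the card number

    def enroll(self, customer_id: str, pan: str) -> None:
        # The card number is handed to the provider and not kept by the merchant.
        self.saved_cards[customer_id] = self.provider.tokenize(pan)

    def bill(self, customer_id: str, amount_cents: int) -> str:
        return self.provider.charge(self.saved_cards[customer_id], amount_cents)


provider = FakeProvider()
shop = Merchant(provider)
shop.enroll("cust-1", "4111111111111111")  # test card number
print(shop.bill("cust-1", 999))            # charged 999 to card ending 1111
```

In this sketch the card number still passes through `Merchant.enroll` on its way to the provider. Many real deployments have the provider capture the card number directly so that it never reaches merchant code at all.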

Benefits of Tokenization in PCI-DSS Compliance

Tokenization brings several benefits in the context of PCI-DSS compliance.

Reducing the Scope of PCI-DSS

By replacing sensitive data with tokens, the volume of sensitive data that an organization needs to protect is significantly reduced, thereby simplifying and narrowing the scope of PCI-DSS compliance.
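
A rough way to picture scope reduction is to compare a system inventory before and after tokenization. The sketch below uses a deliberately simplified rule, assumed for this example only: a system counts as in scope if it handles real card numbers or can detokenize. Actual PCI-DSS scoping also considers systems connected to the cardholder data environment, and the system names are invented.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class System:
    name: str
    handles_pan: bool      # stores, processes, or transmits real card numbers
    can_detokenize: bool   # can turn tokens back into card numbers


def in_scope(system: System) -> bool:
    # Simplified rule for this sketch; real scoping is broader.
    return system.handles_pan or system.can_detokenize


def scoped_names(systems):
    return [s.name for s in systems if in_scope(s)]


before = [
    System("checkout-service", True, False),
    System("order-database", True, False),
    System("analytics-warehouse", True, False),
    System("support-dashboard", True, False),
]
after = [
    System("checkout-service", True, False),     # still captures the card number
    System("token-vault", True, True),           # new component, fully in scope
    System("order-database", False, False),      # now stores tokens only
    System("analytics-warehouse", False, False),
    System("support-dashboard", False, False),
]

print(scoped_names(before))  # all four systems
print(scoped_names(after))   # ['checkout-service', 'token-vault']
```

Note that tokenization adds a component (the vault) that is itself in scope, so the gain comes from the systems that no longer touch card numbers.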

Enhancing Data Security

Tokenization enhances data security by reducing the risk of data exposure. In the event of a data breach, stolen tokens would be useless as they can’t be converted back into sensitive data without the original tokenization system.

Preventing Data Breaches

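Tokenization limits the impact of a breach rather than stopping the intrusion itself. When only non-sensitive tokens reside on a system, an attacker who compromises that system finds no cardholder data to steal, which provides an additional level of protection even if other security controls fail.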

Improving Customer Trust and Loyalty

By adopting tokenization, businesses can showcase their commitment to data security, thereby enhancing consumer confidence and loyalty.

Challenges in Implementing Tokenization for PCI-DSS

While tokenization greatly aids in PCI compliance, its implementation may present some challenges.

Technical Challenges in Tokenization

Tokenization requires complex processes and systems. Integration with existing systems and ensuring the tokenization solution is compatible with other system tools can be technically challenging.

Operational Challenges

Organizational changes might be required for tokenization, such as training employees, updating policies and procedures, and managing the tokenization system.

Risk Management Challenges in Tokenization

Insider threats and the need to maintain the integrity of the tokenization system are among the risks that must be managed carefully.

Countermeasures to Overcome Tokenization Challenges

Countering these challenges involves rigorous testing, continuous monitoring, and regular upgrades of the tokenization system. Employee training and regular audits can also help manage these risks effectively.
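
One concrete form that monitoring and auditing can take is restricting who may detokenize and logging every attempt. The sketch below is an assumption-laden illustration: the `AuditedVault` class, the caller names, and the log layout are all hypothetical, and a real system would authenticate callers and write to tamper-resistant storage.

```python
import secrets
from datetime import datetime, timezone


class AuditedVault:
    """Toy vault that allow-lists callers and records every detokenize attempt."""

    def __init__(self, allowed_callers):
        self._store = {}                    # token -> original value
        self._allowed = set(allowed_callers)
        self.audit_log = []                 # (timestamp, caller, token, outcome)

    def tokenize(self, value: str) -> str:
        token = "tok_" + secrets.token_hex(16)
        self._store[token] = value
        return token

    def detokenize(self, token: str, caller: str) -> str:
        permitted = caller in self._allowed
        # Log before acting so that denied attempts are recorded too.
        outcome = "permitted" if permitted else "denied"
        self.audit_log.append((datetime.now(timezone.utc), caller, token, outcome))
        if not permitted:
            raise PermissionError(f"{caller} may not detokenize")
        return self._store[token]


vault = AuditedVault(allowed_callers={"payment-service"})
token = vault.tokenize("4111111111111111")  # test card number

print(vault.detokenize(token, caller="payment-service"))  # 4111111111111111
try:
    vault.detokenize(token, caller="reporting-job")
except PermissionError as exc:
    print(exc)  # reporting-job may not detokenize

print([entry[3] for entry in vault.audit_log])  # ['permitted', 'denied']
```

Reviewing such a log for denied or unusually frequent requests is one way an audit could surface insider misuse.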

Future of Tokenization in PCI-DSS

Tokenization continues to play a significant role in PCI-DSS compliance.

The Trend of Tokenization in PCI-DSS Compliance

Tokenization is becoming a favored approach for data protection and PCI-DSS compliance. It allows organizations to replace cardholder data with meaningless tokens, thus achieving a high level of data security and a narrower compliance scope.

Assessing the Impact of Regulatory Changes on Tokenization

As data security regulations evolve, tokenization will likely be even more relevant. Regulatory changes aimed at protecting consumer data are likely to further substantiate the use of tokenization in organizations.

Possible Evolution of Tokenization Technology

New technologies and innovations are expected to enhance the utility and functionality of tokenization. Advancements may include real-time tokenization and increased use of cloud-based tokenization solutions.

Best Practices for Tokenization Implementation

Proper implementation of tokenization is crucial for efficiency and effectiveness.

Choosing the Right Tokenization Solution

The right solution depends on several factors, including the nature of the business, its size, its technical capabilities, and its budget.

Ensuring Seamless Integration with Existing Systems

The tokenization solution must integrate cleanly with existing systems to ensure smooth operation and avoid disruptions or complications.

Ongoing Monitoring and Management of Tokenization System

Continuous monitoring and management are necessary both to keep the system running efficiently and to identify and resolve potential issues promptly.

Regular Reviews and Updates of Tokenization Strategy

Regular review of the tokenization strategy is essential to ensure it remains effective, secure, and compliant with changing data security requirements.

Case Studies of Successful Tokenization Implementation

Real-world application of tokenization validates its effectiveness in PCI-DSS compliance.

Overview of Companies that Successfully Implemented Tokenization

Many businesses across industries have successfully implemented tokenization, achieving a significant boost in their data security and PCI-DSS compliance.

Impact of Tokenization on their PCI-DSS Compliance

Companies implementing tokenization report a reduced scope of audit, enhanced data security and increased ease of compliance with PCI-DSS.

Key Takeaways from these Case Studies

The successful implementation of tokenization underlines its importance as a reliable, efficient, and proactive measure for data security and PCI-DSS compliance.

Choosing a Tokenization Vendor

Choosing the right vendor is a key factor in effective tokenization implementation.

Criteria for Selecting the Right Tokenization Service Provider

Key criteria include a proven track record, compatibility with existing systems, fit with the specific needs of the business, cost-effectiveness, customer support, and the range of services offered.

Comparison of Top Tokenization Vendors in the Market

Different vendors offer different ranges of services. Comparative research of top vendors is essential to making an informed selection.

Best Practices in Vendor Management

This involves establishing clear expectations, maintaining effective communication, and monitoring vendor performance to ensure ongoing success.

Exploring Advanced Tokenization Techniques

Advanced methods of tokenization offer enhanced protection and versatility.

Multi-use Tokens and their Potential

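Multi-use tokens map a given piece of sensitive data to the same token every time, so the same card or customer can be recognized across multiple transactions and systems without exposing the underlying data. Single-use tokens, by contrast, represent one specific transaction.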
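
The sketch below contrasts two issuing strategies, assuming that a multi-use token is one that stays the same whenever the same value is tokenized, while a single-use token is freshly generated on every call. The class names are hypothetical, and the in-memory dictionaries stand in for a secured vault.

```python
import secrets


class MultiUseTokenizer:
    """Returns the same token every time it sees the same value."""

    def __init__(self):
        # In a real vault both mappings would live in protected storage.
        self._value_to_token = {}
        self._token_to_value = {}

    def tokenize(self, value: str) -> str:
        if value not in self._value_to_token:
            token = "tok_" + secrets.token_hex(16)
            self._value_to_token[value] = token
            self._token_to_value[token] = value
        return self._value_to_token[value]


class SingleUseTokenizer:
    """Issues a fresh token on every call, even for a repeated value."""

    def __init__(self):
        self._token_to_value = {}

    def tokenize(self, value: str) -> str:
        token = "tok_" + secrets.token_hex(16)
        self._token_to_value[token] = value
        return token


pan = "4111111111111111"  # test card number
multi = MultiUseTokenizer()
single = SingleUseTokenizer()

print(multi.tokenize(pan) == multi.tokenize(pan))    # True
print(single.tokenize(pan) == single.tokenize(pan))  # False, barring a 128-bit random collision
```

The stable token is what lets downstream systems count repeat purchases or link records for one card, at the cost that the token itself becomes a persistent identifier.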

Format Preserving Tokenization Technique

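Format-preserving tokenization generates tokens that keep the format of the original data, such as its length and character set, while replacing the values with random ones. A 16-digit card number, for example, becomes a different 16-digit string. This is useful in systems that validate or require input in a specific format.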
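
As a minimal sketch of the idea, the function below produces a random digit string with the same length as the input. Two design choices are assumptions of this example rather than requirements: it keeps the last four digits, and it rejects candidates that pass the Luhn checksum so the token cannot be mistaken for a valid card number. The function only generates the token value; the mapping back to the original would still be held by the tokenization system. This is random substitution, not format-preserving encryption.

```python
import secrets


def luhn_valid(number: str) -> bool:
    """Return True if the digit string passes the Luhn checksum."""
    total = 0
    for i, ch in enumerate(reversed(number)):
        d = int(ch)
        if i % 2 == 1:  # double every second digit, counting from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def format_preserving_token(pan: str, keep_last: int = 4) -> str:
    """Random digit string of the same length as pan, keeping the last digits."""
    if not pan.isdigit() or not 0 <= keep_last < len(pan):
        raise ValueError("expected a digit string longer than keep_last")
    head_len = len(pan) - keep_last
    tail = pan[head_len:]
    while True:
        head = "".join(secrets.choice("0123456789") for _ in range(head_len))
        token = head + tail
        # Skip the original value and anything that looks like a valid card number.
        if token != pan and not luhn_valid(token):
            return token


print(format_preserving_token("4111111111111111"))  # 16 digits ending in 1111, random each run
```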

The Role of Artificial Intelligence in Tokenization

AI can be utilized to improve and streamline the tokenization process, enabling automatic detection and tokenization of sensitive data.
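
For comparison, a rule-based baseline for automatic detection is sketched below. It is not AI: it uses a regular expression to find candidate card numbers in free text and a Luhn check to filter out look-alikes, then replaces each hit with a token. The `redact_pans` and `toy_tokenize` names are hypothetical, and a learned detector would aim to catch cases that fixed patterns like this one miss.

```python
import re
import secrets

# 13 to 19 digits, optionally separated by single spaces or hyphens.
CANDIDATE = re.compile(r"\b(?:\d[ -]?){12,18}\d\b")


def luhn_valid(digits: str) -> bool:
    """Return True if the digit string passes the Luhn checksum."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def redact_pans(text: str, tokenize) -> str:
    """Replace Luhn-valid card-number candidates in text with tokens."""
    def replace(match):
        digits = re.sub(r"[ -]", "", match.group())
        return tokenize(digits) if luhn_valid(digits) else match.group()
    return CANDIDATE.sub(replace, text)


issued = {}  # token -> card number; stands in for a real token vault


def toy_tokenize(pan: str) -> str:
    token = "tok_" + secrets.token_hex(8)
    issued[token] = pan
    return token


message = "Customer paid with 4111 1111 1111 1111; order ref 1234567890123."
print(redact_pans(message, toy_tokenize))
# The card number is replaced by a token; the order reference fails Luhn and is left alone.
```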

Potential Future Developments in Tokenization Techniques

As tokenization evolves, expect to see advancements in speed, scalability, and versatility which will further augment its applicability in PCI-DSS compliance.